Ethics in Artificial Intelligence

Review of a very interesting meetup by Dr. McKillop

I recently attended a meetup with the promising title “Ethics in AI”. It was led by Dr. Chris McKillop, who not only has a solid theoretical background but also a great deal of experience working in Data Science and AI.

(It was also a great opportunity to visit the SAS Institute's offices, a pretty impressive building in one of the fanciest parts of downtown Toronto.)

Her talk started with the hype around AI: how everyone is talking about it, and how the news loves to suggest that we'll soon have a Terminator on our streets, when in reality we are very far from that. She explained that this kind of hype doesn't help at all. It just tilts public opinion with unrealistic expectations and pseudo-scientific claims, which is a recipe for disastrous policymaking.

From there we moved on to the responsibility people have when working on AI. A wonderful point she made here is that everything we do, whether we intend it or not, has an impact on the world around us. Sophia the robot, for instance, is an example of people being misled into thinking they are dealing with strong AI when they are actually talking to a pre-programmed chatbot. She compared it to the original Mechanical Turk, which people were genuinely scared of without knowing what it really was (a well-executed ruse).

Sophia's approach to winning us over is to appear friendly. I find this… debatable; I think she falls on the creepy side. But the same approach turned on its head is what we get from the Boston Dynamics team, McKillop explained. Their robots are not designed to appear friendly, but rather to inspire fear. That may not be a conscious decision on anyone's part, but such decisions do shape how the public perceives the technology involved.

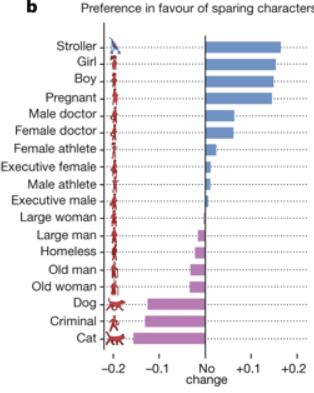

Then we turned to ethics itself, starting with the well-known trolley problem and some very interesting findings about how we respond to it.

Did you know that, from the average person's point of view, a criminal's life is worth more than a cat's but less than a dog's? No? Check out the Moral Machine experiment by Awad et al. Did you know that people are more likely to save men than women? Does that surprise you?

This was a very good point McKillop made: AI is not only useful as a tool, it also reflects part of our humanity, both our virtues and our flaws. As the technology improves, we will learn more about ourselves through it.

The discussion here also centered on the huge obstacles we humans inherently face in reasoning about ethics correctly, illustrated by a simple yet well-known example: cognitive biases. Have you heard of them? Maybe there are 4 or 5, maybe around 10, right? Nope, wrong. There are more than 90 cognitive biases active in our brains, every day, all the time.

So when we look into automating our work, especially through AI, where we decide that a task requires a certain level of intelligence, and especially as we entrust more of our lives to it, we need to put ourselves in other people's shoes and think about what this will do to them. Are we being fair?

All in all, it was a very interesting and productive discussion. I hope I get to tell you about more meetups like this one.