DAIN

About a month ago, a YouTube video crossed my path. It was a film by the Lumière brothers (1896), but in full 4K at 60 fps. This caught my attention, and of course, I wondered how we could make the technology behind it more accessible to people.

Before we move forward, you can take a look at the video yourself.

The video is pretty impressive, and it looks incredibly recent even though it’s not.

So… how did we get to a real-life version of the fictional “enhance”?

Enhancing videos

The creator (Denis Shiryaev) took several steps from the original:

Upscaling

Upscaling is the name given to an expansion of the source. In the case of videos, it means adding more pixels to each of the images of the original video. The difficult part is working out which colors the new pixels need to be so that shapes are preserved and textures look natural. Adding pixels to a picture of a plain green wall (fairly easy) is not the same as adding pixels to a low-resolution face (very difficult).

For this step, Shiryaev used Gigapixel AI, a commercial product from Topaz Labs.
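To illustrate why the plain wall is the easy case, here is a minimal sketch of the simplest possible upscaler, nearest-neighbour repetition, written with NumPy. This is not what Gigapixel AI does; the function name `upscale_nearest` and the tiny test image are made up for illustration.

```python
import numpy as np

def upscale_nearest(image: np.ndarray, factor: int) -> np.ndarray:
    """Enlarge an (H, W) or (H, W, C) image by repeating each pixel `factor` times."""
    if factor < 1:
        raise ValueError("factor must be >= 1")
    # Repeat rows, then columns: every new pixel copies its nearest source pixel.
    return np.repeat(np.repeat(image, factor, axis=0), factor, axis=1)

# A plain "green wall": every pixel has the same colour, so copying loses nothing.
wall = np.full((2, 2, 3), (0, 200, 0), dtype=np.uint8)
big_wall = upscale_nearest(wall, 4)
print(big_wall.shape)  # (8, 8, 3)
```

On a uniform image the result is perfect. On a face, the same approach only produces bigger blocks, which is why learned upscalers are used to guess plausible detail instead.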

Colorization

Not the case for this video, but when dealing with old media, it’s very likely that colors need to be restored. This has the same challenges as upscaling: when looking at an old video, where the information stored is in shades of grey, which color should each pixel have? That’s not an easy question.

Interpolation

And finally, interpolation is needed. Interpolation means estimating an unknown data point that lies between existing, known data points.

I’ll expand a bit more on this particular concept.

As you might know, videos are just a sequence of images. When they run at 24+ fps (frames per second), we see them as a natural animation. The more frames per second an animation has, the more fluid it looks.
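To make the idea concrete, here is a small sketch of linear interpolation applied to a single value, such as the horizontal position of an object that moves between two known frames. The helper names `lerp` and `in_between_positions` are hypothetical, and real frame interpolation works on whole images rather than on one number.

```python
def lerp(a: float, b: float, t: float) -> float:
    """Linear interpolation: the point a fraction t of the way from a to b."""
    return a + (b - a) * t

def in_between_positions(x_start: float, x_end: float, new_frames: int) -> list:
    """Positions for `new_frames` evenly spaced frames strictly between two known frames."""
    return [lerp(x_start, x_end, i / (new_frames + 1)) for i in range(1, new_frames + 1)]

# Going from 15 fps to 60 fps means 3 new frames between each original pair.
print(in_between_positions(0.0, 8.0, 3))  # [2.0, 4.0, 6.0]
```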

Most gamers will be familiar with the concept, as ideally, they strive for 60 fps. 60 fps matches the refresh rate of most screens (60 Hz), which means that the game is generating as many frames as the screen can show, and not one more. Note: there are screens with faster refresh rates, but for most of us, 60 fps is what we have.

This is the difference between 10 fps and 60 fps.

This is exactly what was done with DAIN.

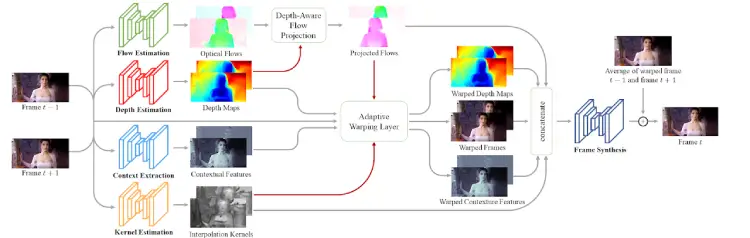

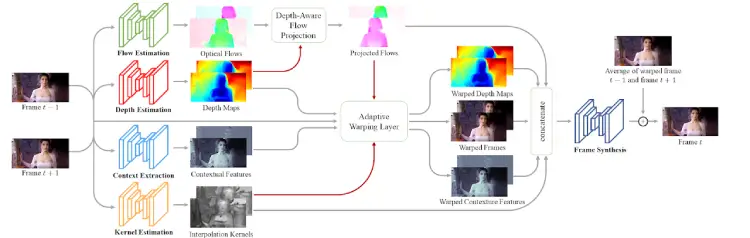

DAIN

DAIN stands for “Depth-Aware video frame INterpolation”. It’s basically a neural network that calculates the middle frame that should sit between frame1 and frame2. However, it’s not a simple average, because averaging does not give good results. Instead, it uses filters that adapt to the depth of the images, producing a crisper interpolation for nearby objects than for far-away ones.
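To see why a simple average falls short, here is a minimal NumPy sketch of that naive approach. It is the baseline that DAIN improves on, not DAIN itself, and the function name `blend_average` and the one-row test frames are made up for illustration.

```python
import numpy as np

def blend_average(frame1: np.ndarray, frame2: np.ndarray) -> np.ndarray:
    """Naive middle frame: the per-pixel mean of the two neighbouring frames."""
    # Widen to float before adding so uint8 values cannot overflow.
    mean = (frame1.astype(np.float32) + frame2.astype(np.float32)) / 2.0
    return mean.round().astype(np.uint8)

# A 1x5 greyscale strip with a bright dot moving from column 0 to column 4.
frame1 = np.array([[200, 0, 0, 0, 0]], dtype=np.uint8)
frame2 = np.array([[0, 0, 0, 0, 200]], dtype=np.uint8)
middle = blend_average(frame1, frame2)
print(middle.tolist())  # [[100, 0, 0, 0, 100]]
```

The dot should be in the middle column of the in-between frame. Averaging instead leaves two half-bright ghosts at the start and end positions, which is the blur you see when frames are simply cross-faded.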

As you might see from the network architecture, DAIN needs five different convolutional networks, along with a few other layers. This means that it requires a lot of processing power.

This is why DAIN was created to be used with NVIDIA cuDNN. This set of libraries makes it efficient to write parallel code that runs on powerful NVIDIA GPUs, but that’s also part of the problem: it can only be run on computers that have that kind of GPU.

Google Colab

However, Google Colaboratory is a wonderful place where we can access computer instances with GPUs, and even TPUs, for free. Of course, it is meant for research purposes, but I wanted a place where we could experiment with this code without having to pay a lot of money.

With the help of Styler00Dollar (whose other nickname is the really hilarious sudo rm -rf / --no-preserve-root), we’ve integrated the systems available at Google Colab with ffmpeg and some custom Python code, so that we’re able to take most source videos and easily output interpolated, improved videos. In fact, the GIFs I used as examples above were made with our version of the script.

Our code will:

- Allow you to indicate which input and output files you want

- Allow you to set the output FPS desired

- Allow you to specify the location of the input video frames

- Allow you to specify the location of the output video frames

- Allow you to specify if you want the last and first frames interpolated (for creating looped animations)

- Connect to your Google Drive, so that videos can be loaded / saved there

- Check the version of the NVIDIA GPU available

- Download the latest version of the DAIN code and build it

- Use OpenCV to detect the fps of the input

- Use ffmpeg to get all of the frames of the input as individual images

- Run DAIN for each pair of (frame_n, frame_n+1), generating as many in-between frames as required to achieve the target FPS

- Use ffmpeg to get all of the generated frames into a new video
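The steps in this list can be sketched roughly as follows. This is not the code from the notebook: every function name is hypothetical, the `%05d.png` frame naming and the `libx264` output settings are assumptions, and rounding the FPS ratio up is just one plausible way to decide how many in-between frames to generate. The DAIN call itself is left out.

```python
import math

def detect_fps(video_path: str) -> float:
    """Read the FPS reported by the video container, using OpenCV."""
    import cv2  # imported lazily so the planning helpers work without OpenCV
    capture = cv2.VideoCapture(video_path)
    try:
        return capture.get(cv2.CAP_PROP_FPS)
    finally:
        capture.release()

def frames_between(source_fps: float, target_fps: float) -> int:
    """In-between frames to generate per original pair (0 if none are needed)."""
    if source_fps <= 0 or target_fps <= 0:
        raise ValueError("fps values must be positive")
    # Assumption: round the ratio up so the output reaches at least the target FPS.
    return max(math.ceil(target_fps / source_fps) - 1, 0)

def frame_pairs(frame_count: int, loop: bool = False) -> list:
    """Index pairs (n, n+1) to interpolate; `loop` also joins the last frame to the first."""
    pairs = [(i, i + 1) for i in range(frame_count - 1)]
    if loop and frame_count > 1:
        pairs.append((frame_count - 1, 0))
    return pairs

def extract_frames_cmd(video_path: str, frames_dir: str) -> list:
    """ffmpeg command that writes every input frame as a numbered PNG."""
    return ["ffmpeg", "-i", video_path, f"{frames_dir}/%05d.png"]

def assemble_video_cmd(frames_dir: str, fps: float, output_path: str) -> list:
    """ffmpeg command that joins numbered PNG frames into a video at `fps`."""
    return ["ffmpeg", "-framerate", str(fps), "-i", f"{frames_dir}/%05d.png",
            "-c:v", "libx264", "-pix_fmt", "yuv420p", output_path]

print(frames_between(10, 60))       # 5
print(frame_pairs(3, loop=True))    # [(0, 1), (1, 2), (2, 0)]
```

The command lists can be passed to `subprocess.run(cmd, check=True)` on a machine where ffmpeg is installed.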

You can check that version of our code in my copy of the repository (AlphaGit/DAIN) or Styler00Dollar’s version (Styler00Dollar/Colab-DAIN).