TAS-PC

Terraform-AWS Serverless Producer-Consumer Module

I needed to set up some infrastructure in AWS, but I did not want to configure it by hand every time I needed it. What better opportunity to learn Terraform?

So I created a little module that sets up a small serverless (Lambda-based) producer-consumer architecture, powered by an SQS queue.

Starting with Terraform

What I like the most about Terraform is its extensive documentation, especially for the providers that HashiCorp maintains. I’ve been using the AWS provider extensively now and the documentation is just wonderful.

However, this is not the case for most community modules. I initially started by trying out third-party modules that could make my work easier, and they turned out to be mostly undocumented, outdated, and left behind on previous versions of Terraform.

Aside from that, the syntax is pretty easy to understand. It mostly works like this:

    resource "resource_type" "resource_name" {
      property = value
    }

And that’s about it!
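To make that pattern concrete, here is a minimal sketch using a resource type from the AWS provider. The resource name `example` and the queue name are placeholders chosen for illustration, not values taken from the module.

```hcl
# "aws_sqs_queue" is the resource type, "example" is the local name
# used to reference this resource elsewhere in the configuration.
resource "aws_sqs_queue" "example" {
  # Placeholder queue name (an assumption for this sketch).
  name = "example-queue"

  # Keep unprocessed messages for one day (value is in seconds).
  message_retention_seconds = 86400
}
```

Other resources can then refer to this queue with expressions such as `aws_sqs_queue.example.arn`.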

TAS-PC

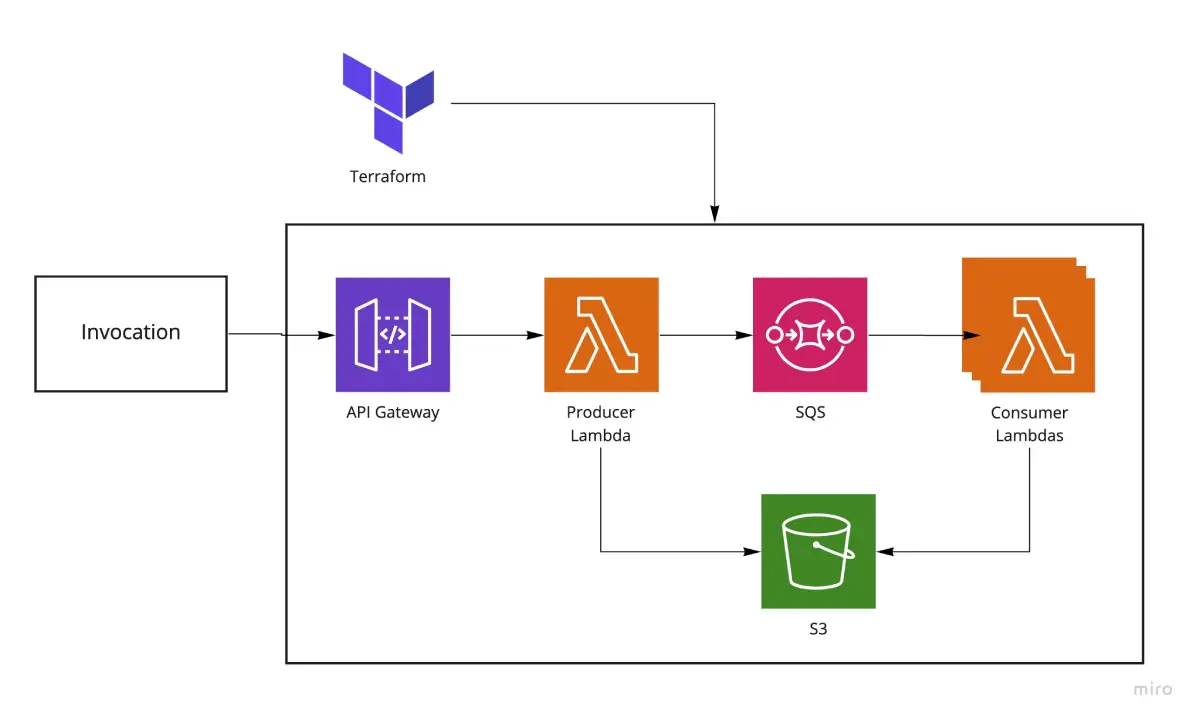

The module I built creates the following architecture:

As you can see, there is the main terraform module that gives places to everything else.

Then there’s an API Gateway, which automatically creates a stage and a deployment, with a specific route set up with an integration. All of this generates a public-facing HTTP endpoint that can be used right away. Of course, future versions could add authorization Lambdas, IP restrictions, API keys, or any other kind of access control you might want on a gateway. For my particular use case, none of that is needed right now.
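As a rough sketch of how such a gateway can be wired up, the fragment below uses the AWS provider's HTTP API (API Gateway v2) resources. This is an assumption: the module may well use different resources, and every name, the route key `POST /messages`, and the two input variables are hypothetical.

```hcl
# Hypothetical inputs: the producer Lambda is assumed to be defined elsewhere.
variable "producer_invoke_arn" {
  type = string
}

variable "producer_function_name" {
  type = string
}

resource "aws_apigatewayv2_api" "http" {
  name          = "tas-pc-example"
  protocol_type = "HTTP"
}

# The integration forwards incoming requests to the producer Lambda.
resource "aws_apigatewayv2_integration" "producer" {
  api_id                 = aws_apigatewayv2_api.http.id
  integration_type       = "AWS_PROXY"
  integration_uri        = var.producer_invoke_arn
  payload_format_version = "2.0"
}

# A single route pointing at the integration above.
resource "aws_apigatewayv2_route" "produce" {
  api_id    = aws_apigatewayv2_api.http.id
  route_key = "POST /messages"
  target    = "integrations/${aws_apigatewayv2_integration.producer.id}"
}

# With auto_deploy enabled, changes are deployed to the stage automatically.
resource "aws_apigatewayv2_stage" "default" {
  api_id      = aws_apigatewayv2_api.http.id
  name        = "$default"
  auto_deploy = true
}

# Allow API Gateway to invoke the producer function.
resource "aws_lambda_permission" "allow_apigw" {
  statement_id  = "AllowInvokeFromHttpApi"
  action        = "lambda:InvokeFunction"
  function_name = var.producer_function_name
  principal     = "apigateway.amazonaws.com"
  source_arn    = "${aws_apigatewayv2_api.http.execution_arn}/*/*"
}

# The public endpoint that can be called right after `terraform apply`.
output "endpoint" {
  value = aws_apigatewayv2_stage.default.invoke_url
}
```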

The integration configured with the API Gateway is a Lambda whose source code is packaged and uploaded by the module itself. In the module configuration you can specify the location of the source files, the runtime, and the function handler. Basically, you can make it yours with three lines of code. I have already included an example so that it works right out of the box.
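One common way to package and upload a function from Terraform is the `archive_file` data source combined with `aws_lambda_function`. The sketch below assumes that approach; the variable names, defaults, and resource names are made up for illustration and are not the module's actual inputs.

```hcl
# Three hypothetical settings: where the code lives, which runtime
# to use, and which handler to call.
variable "producer_source_dir" {
  type = string
}

variable "producer_runtime" {
  type    = string
  default = "python3.12"
}

variable "producer_handler" {
  type    = string
  default = "index.handler"
}

# Zip the source directory at plan/apply time.
data "archive_file" "producer" {
  type        = "zip"
  source_dir  = var.producer_source_dir
  output_path = "${path.module}/build/producer.zip"
}

# Execution role that the Lambda service is allowed to assume.
resource "aws_iam_role" "producer" {
  name = "tas-pc-producer"

  assume_role_policy = jsonencode({
    Version = "2012-10-17"
    Statement = [{
      Effect    = "Allow"
      Action    = "sts:AssumeRole"
      Principal = { Service = "lambda.amazonaws.com" }
    }]
  })
}

resource "aws_lambda_function" "producer" {
  function_name = "tas-pc-producer"
  role          = aws_iam_role.producer.arn
  filename      = data.archive_file.producer.output_path

  # Redeploy whenever the packaged code changes.
  source_code_hash = data.archive_file.producer.output_base64sha256

  runtime = var.producer_runtime
  handler = var.producer_handler
}
```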

This function is set up with permission to write messages to a queue. The queue is also set up as an event source that triggers consumer Lambda functions to process its messages. In the same fashion, the consumer Lambda functions can be configured with three settings to completely customize their behavior.

Finally, and I haven’t gotten to this point yet, I will add an option for some kind of shared storage. Based on my current needs, this will be an S3 bucket, but I don’t see why it couldn’t be a DynamoDB table or even a relational database. Time will tell!
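The queue wiring described above could look roughly like the following. Treat it as a sketch under assumptions: the input variables stand in for the producer and consumer functions defined elsewhere, and the names, policy scope, and batch size are illustrative rather than the module's real values.

```hcl
# Hypothetical inputs referring to resources defined elsewhere.
variable "producer_role_name" {
  type = string
}

variable "consumer_role_name" {
  type = string
}

variable "consumer_function_arn" {
  type = string
}

resource "aws_sqs_queue" "messages" {
  name = "tas-pc-messages"
}

# Let the producer write messages to the queue.
resource "aws_iam_role_policy" "producer_send" {
  name = "allow-sqs-send"
  role = var.producer_role_name

  policy = jsonencode({
    Version = "2012-10-17"
    Statement = [{
      Effect   = "Allow"
      Action   = "sqs:SendMessage"
      Resource = aws_sqs_queue.messages.arn
    }]
  })
}

# Let the consumer read and delete messages, which the
# event source mapping needs in order to poll the queue.
resource "aws_iam_role_policy" "consumer_receive" {
  name = "allow-sqs-receive"
  role = var.consumer_role_name

  policy = jsonencode({
    Version = "2012-10-17"
    Statement = [{
      Effect = "Allow"
      Action = [
        "sqs:ReceiveMessage",
        "sqs:DeleteMessage",
        "sqs:GetQueueAttributes"
      ]
      Resource = aws_sqs_queue.messages.arn
    }]
  })
}

# Trigger the consumer with batches of messages from the queue.
resource "aws_lambda_event_source_mapping" "consume" {
  event_source_arn = aws_sqs_queue.messages.arn
  function_name    = var.consumer_function_arn
  batch_size       = 10
}
```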

All in all, a pretty simple concept, now with the joy of automation!

You can see the full module at its GitHub repository.